What is Docker? Why Should You Use It and How Does It Work?

In the ever-evolving IT industry, developers are always looking for ways to make their work more efficient and scalable. Docker is one such groundbreaking tool.

Whatever your experience level, a solid understanding of Docker can greatly increase your productivity and streamline your workflow.

Let's explore what Docker is, how it works, and why it has transformed software development.

What is Docker?

Docker is an open-source platform for automating the deployment, scaling, and management of applications.

Put more simply, Docker makes it easier to build, deploy, and run applications by using containers.

Containers are lightweight, standalone, executable software packages that include everything needed to run a program: code, runtime, system tools, libraries, and other components.

Why Use Docker?

Suppose you are a developer working on a project that requires specific libraries and a specific version of a programming language.

Without Docker, you would have to install these requirements on your computer by hand, which is tedious and error-prone.

If other developers work on the same project and struggle to reproduce that exact environment, you also run into the classic "works on my machine" problem.

Docker solves this by packaging your application and its dependencies into a container, so it runs reliably in any environment: a cloud server, a colleague's laptop, or your own computer.
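In practice, the environment is described in a Dockerfile, a text file of build instructions. The Python sketch below writes one for the scenario above, pinning both the interpreter version (through the base image tag) and each listed library. The function `render_dockerfile`, the `python:<version>-slim` base image, the `requests==2.31.0` pin, and the `main.py` entry point are illustrative assumptions, not part of any particular project.

```python
from typing import List


def render_dockerfile(python_version: str, requirements: List[str], entrypoint: str) -> str:
    """Return Dockerfile text that pins the interpreter and library versions."""
    lines = [
        # Official Python images on Docker Hub are tagged by version, e.g. python:3.12-slim.
        f"FROM python:{python_version}-slim",
        "WORKDIR /app",
    ]
    if requirements:
        # Pinning name==version fixes the version of each listed library.
        lines.append("RUN pip install --no-cache-dir " + " ".join(requirements))
    # Copy the application code into the image and define how the container starts.
    lines.append("COPY . /app")
    lines.append(f'CMD ["python", "{entrypoint}"]')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical project: one pinned dependency and a main.py entry point.
    print(render_dockerfile("3.12", ["requests==2.31.0"], "main.py"), end="")
```

Saving the output as `Dockerfile` next to the code and running `docker build -t my-app .` would give every developer the same pinned environment instead of a hand-installed one.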

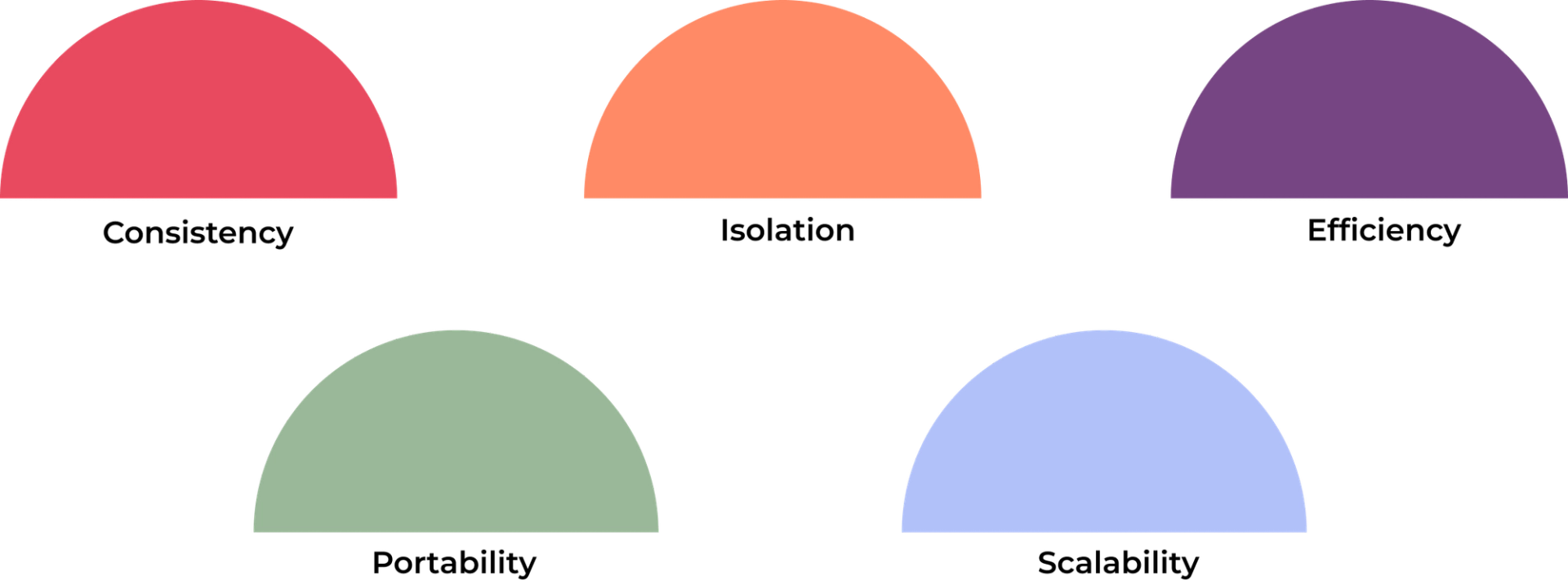

Key Benefits of Docker

- Consistency: Docker ensures that your application behaves the same way on every platform where it runs.

- Isolation: Each container runs in its own isolated environment, which prevents conflicts between applications, for example two programs that need different versions of the same library.

- Portability: Containers run on any system that supports Docker, so applications move smoothly between development, testing, and production environments.

- Scalability: Docker makes it easy to scale applications up or down with demand, for example by starting or stopping additional containers from the same image.

- Efficiency: Containers are lightweight and use system resources more efficiently than traditional virtual machines, so you can run more applications on the same hardware.
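Several of these benefits map directly onto options of the `docker run` command. The Python sketch below assembles that command as an argument list; the helper `docker_run_args` and the `nginx:1.25` example image are illustrative assumptions, while `--detach`, `--name`, `--memory`, `--cpus`, and `--publish` are standard `docker run` flags. Starting several copies of the same image under different names, as in the loop at the bottom, is the simplest form of scaling out.

```python
from typing import Dict, List, Optional


def docker_run_args(image: str, name: str,
                    memory: Optional[str] = None,
                    cpus: Optional[float] = None,
                    ports: Optional[Dict[int, int]] = None) -> List[str]:
    """Build the argv for `docker run`, optionally limiting memory and CPU."""
    args = ["docker", "run", "--detach", "--name", name]
    if memory is not None:
        # Hard memory limit for the container, e.g. "256m".
        args += ["--memory", memory]
    if cpus is not None:
        # Maximum number of CPUs the container may use; fractions are allowed.
        args += ["--cpus", str(cpus)]
    for host_port, container_port in (ports or {}).items():
        # Map a host port to a port inside the container.
        args += ["--publish", f"{host_port}:{container_port}"]
    args.append(image)
    return args


if __name__ == "__main__":
    # Three replicas of one image, each with its own name, host port and limits.
    for i in range(3):
        cmd = docker_run_args("nginx:1.25", f"web-{i}", memory="256m",
                              cpus=0.5, ports={8080 + i: 80})
        print(" ".join(cmd))
```

The sketch only prints the commands. On a machine with Docker installed, passing one of these lists to `subprocess.run(args, check=True)` would actually start the container.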

How Docker Works

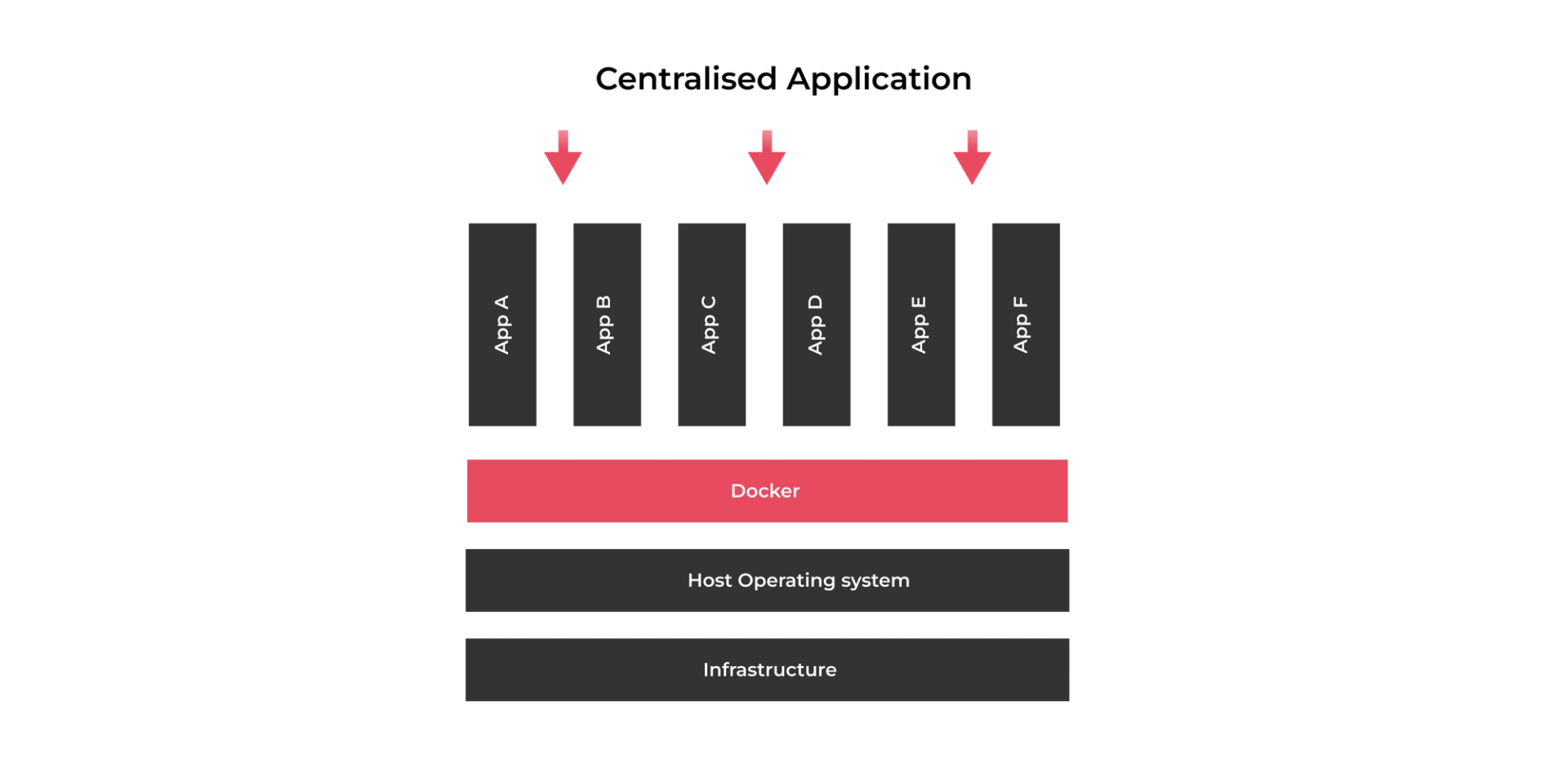

Docker uses a client-server architecture.

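The Docker client (the docker command-line tool) sends your commands, such as docker build or docker run, to the Docker daemon through a REST API. The daemon does the heavy lifting of building, running, and managing containers. The client and the daemon can run on the same machine, or the client can connect to a daemon on a remote host.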
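To see the client-server split in action, the sketch below plays the role of a very small client: it sends an HTTP request straight to the daemon over its Unix socket, which is roughly what the docker CLI does under the hood. It assumes a Linux host, the default socket path `/var/run/docker.sock`, and permission to access it. `UnixHTTPConnection` and `daemon_get` are hypothetical helpers; `GET /containers/json` is a real Docker Engine API endpoint.

```python
import http.client
import json
import socket

# Default location of the daemon's socket on Linux (other setups may differ).
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that travels over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # The host name only fills the Host header; the socket path decides where we connect.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        self.sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        self.sock.settimeout(self.timeout)
        self.sock.connect(self.socket_path)


def daemon_get(path: str, socket_path: str = DOCKER_SOCKET):
    """Send GET `path` to the daemon and return the decoded JSON reply."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"daemon returned {resp.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

On such a host, `daemon_get("/containers/json")` should return the running containers that `docker ps` lists, as JSON objects, and `daemon_get("/version")` returns the daemon's version details. For anything beyond experiments, the official Docker SDK for Python (the `docker` package) is the better choice.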

Basic Docker Components

- Docker Image: A read-only template from which containers are created. Images are usually built from a Dockerfile, a short script with instructions for producing the image.

- Docker Container: A runnable instance of an image. Containers are isolated systems, each with its own filesystem, network interfaces, and share of system resources such as memory.

- Dockerfile: A text file containing the instructions for building a Docker image. It specifies the base image, the dependencies, and the application code.

- Docker Hub: A cloud-based registry where Docker users store and share images. A common use is finding base images for applications.
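Docker Hub is also the default place Docker looks for images. When you write a short name such as `python:3.12-slim`, Docker expands it to `docker.io/library/python:3.12-slim`, and it assumes the tag `latest` when none is given. The Python sketch below imitates that expansion; `parse_image_ref` and `ImageRef` are hypothetical names, and the rules are deliberately simplified (digests such as `@sha256:...` and other edge cases are not handled).

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image name into registry, repository and tag (simplified)."""
    name, tag = ref, "latest"  # Docker assumes :latest when no tag is given
    if ":" in ref.rsplit("/", 1)[-1]:
        # A colon after the last slash separates the tag (earlier colons are registry ports).
        name, tag = ref.rsplit(":", 1)
    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        # The first component looks like a host name, e.g. ghcr.io or localhost:5000.
        registry, repository = first, rest
    else:
        # Otherwise Docker Hub is the default registry.
        registry, repository = "docker.io", name
        if "/" not in repository:
            # Official images live under the library/ namespace.
            repository = "library/" + repository
    return ImageRef(registry, repository, tag)
```

With this sketch, `parse_image_ref("python:3.12-slim")` gives registry `docker.io`, repository `library/python`, and tag `3.12-slim`, which is why `docker pull python:3.12-slim` and `docker pull docker.io/library/python:3.12-slim` fetch the same image.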

Docker vs. Virtual Machines

Docker and virtual machines (VMs) are two powerful technologies in software development and IT infrastructure, both notable for their ability to isolate applications and make better use of resources.

Although they reach these goals in fundamentally different ways, both have transformed how we deploy and maintain applications. Whether you are a system administrator, a developer, or a tech enthusiast, knowing how Docker containers and VMs differ will help you make better-informed decisions about your infrastructure.

CONTAINERS

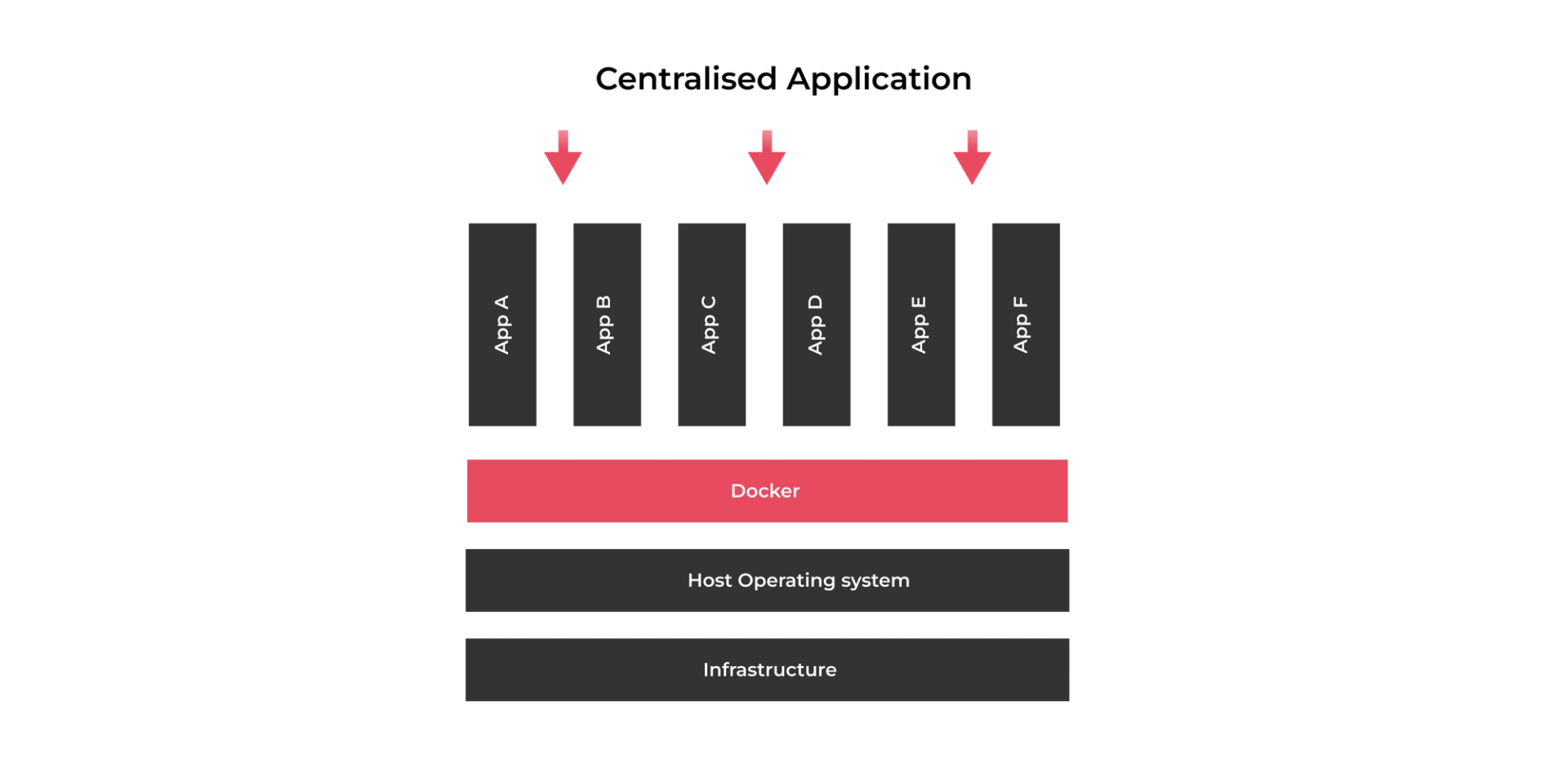

Containers are an abstraction at the application layer that packages code and dependencies together.

On a single machine, several containers can run independently as separate processes in user space, sharing the OS kernel with one another.

Because containers share the host's kernel instead of each carrying a full operating system, they take up less space than VMs (container images are typically tens of MBs in size) and reduce the number of VMs and operating systems you need to run.

VIRTUAL MACHINES

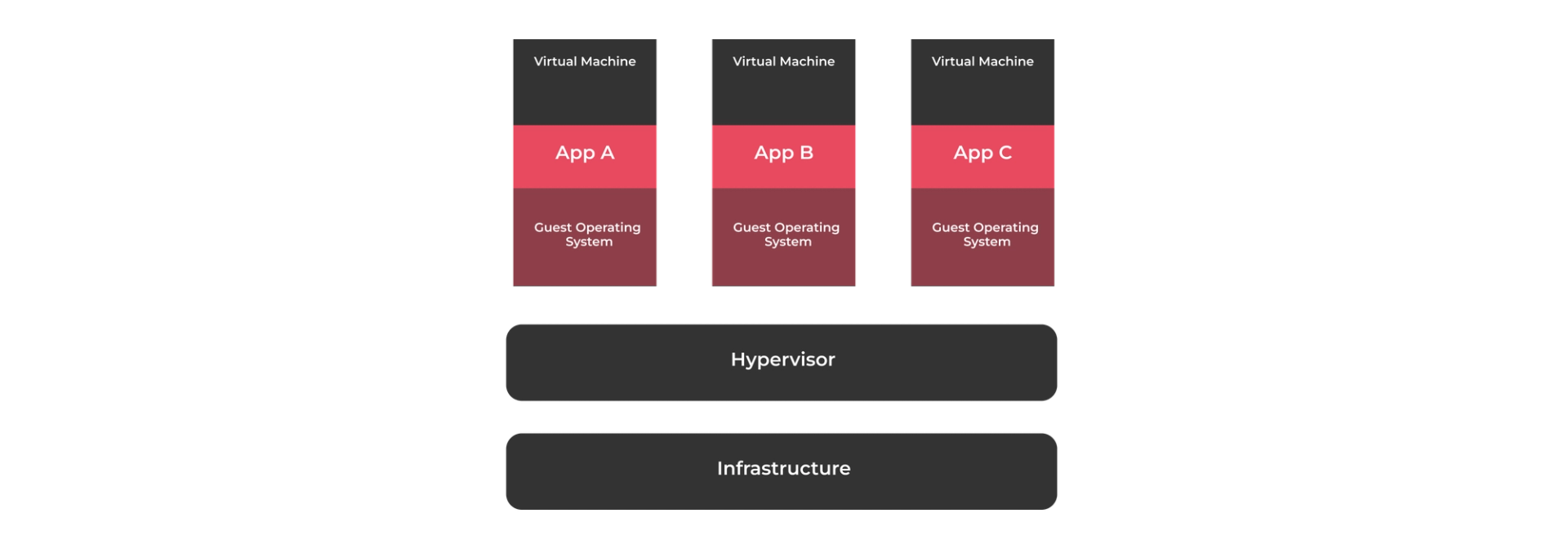

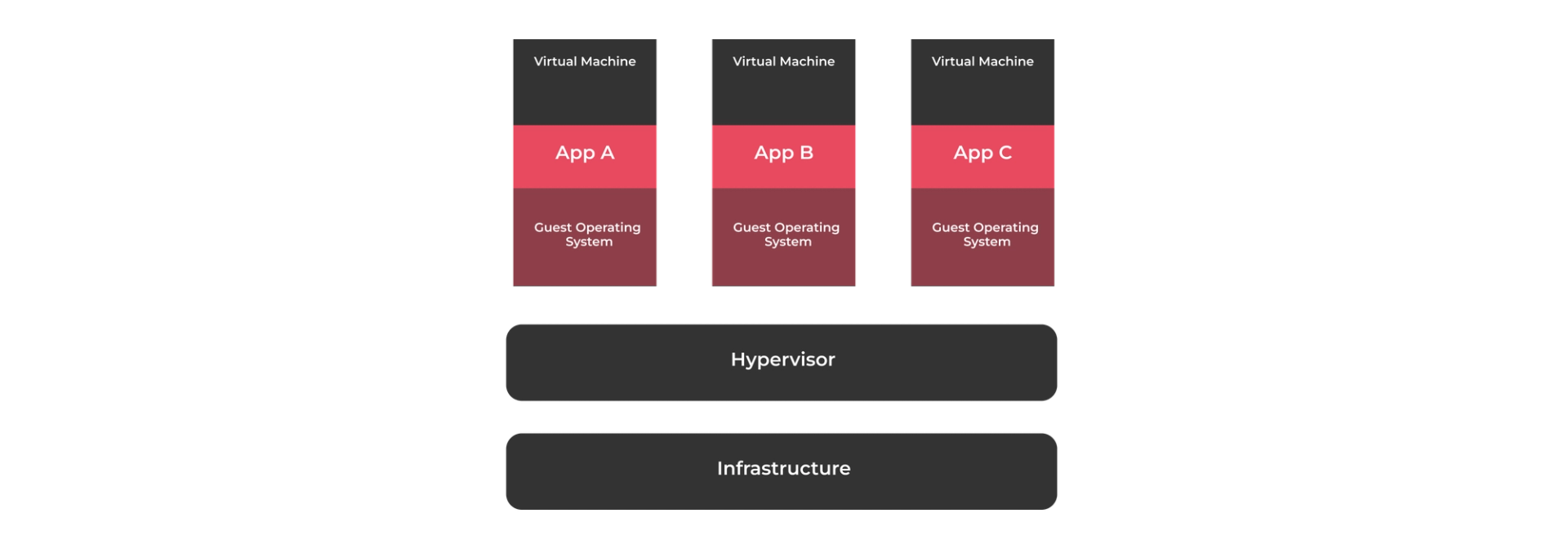

Virtual machines (VMs) abstract physical hardware, allowing one server to function as many servers.

A hypervisor allows many VMs to run on a single machine. Each VM includes a complete copy of an operating system, the application, and any required binaries and libraries, which typically adds up to tens of GBs.

Additionally, virtual machines can take a while to boot.

Docker and VMs are both used to run applications and services in isolation, but they work differently and suit different use cases. In short, containers share the host's kernel and are measured in MBs, while each VM carries its own full operating system, is measured in GBs, and can take a while to boot.
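A back-of-the-envelope calculation shows why the size difference matters. The sketch assumes 50 MB per container image and 20 GB per VM, illustrative values within the "tens of MBs" and "tens of gigabytes" ranges mentioned above, and it counts every image and every VM in full.

```python
# Illustrative sizes in decimal megabytes (assumptions, not measurements).
CONTAINER_IMAGE_MB = 50      # "tens of MBs" per container image
VM_IMAGE_MB = 20_000         # "tens of gigabytes" per VM (20 GB)


def total_gb(copies: int, size_mb: int) -> float:
    """Disk space for `copies` full copies of an image, in decimal gigabytes."""
    return copies * size_mb / 1000


apps = 10
containers = total_gb(apps, CONTAINER_IMAGE_MB)  # 0.5 GB
vms = total_gb(apps, VM_IMAGE_MB)                # 200.0 GB
print(f"{apps} apps: containers ~{containers} GB, VMs ~{vms} GB ({vms / containers:.0f}x)")
```

Under these assumptions, ten applications need about 0.5 GB as containers versus about 200 GB as VMs, a 400-fold difference.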

Conclusion

Docker is an effective tool that makes building, launching, and running applications easier. It makes scaling simple, keeps environments consistent, and isolates applications to prevent conflicts. Whatever the size of your project, it can simplify managing your development environment and save you time.

Adopting Docker can lead to more agile development processes, faster deployment cycles, and more efficient workflows. Try it on your next project to see how it can change the way you develop and maintain applications.